The interactive installation entitled InterACTE makes it possible to improvise with a virtual character whose gestures are generated by a genetic algorithm from expressive and linguistic gestures captured from real performers (a mime, a poet, a linguist, and a choir leader). The installation presents an avatar in an immersive virtual world.

This creation was made within the framework of the CIGALE research project, supported by Labex Arts-H2H with its partners: the Paris 8 University laboratories INRéV and SFL (Structures Formelles du Langage), and the CNSAD (Conservatoire National Supérieur d’Art Dramatique de Paris).

The CIGALE Platform

CIGALE is an artistic and dialogical platform that explores the hybridization between human and virtual gestures in real time. The platform makes it possible to study complementary aspects of gestures, from the linguistic field to the arts, and to understand the relationships between symbolic gestures (coverbal ones), expressive gestures (choral conducting and poetry) and full-body gestures (mime).

4 years (2012-2015) / Cross-disciplinary project / 7 partners of the LABEX ARTS-H2H, Paris 8 University

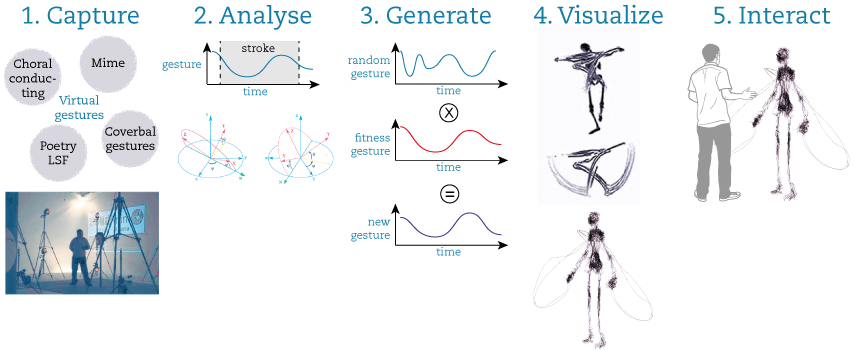

Starting from motion capture (1), we have created four databases of gestures. The platform makes it possible to study and analyze gestures, strokes and meaning (2). We then generate new gestures using a genetic algorithm that converges to new gestures inspired by those in the databases (3). To visualize the gestures, we can render the motion on different characters by changing the body's appearance (mesh) or by using particle effects or trails (4). Finally, it is possible to interact through gestures with the virtual characters, which can respond autonomously to the user or to the audience (5).

1. Motion capture

We have created four databases of gestures, from symbolic gestures (Co-verbal and Choral conducting) to expressive gestures (Mime and Poetry in French Sign Language). The gestures were captured with four actors in a professional motion capture studio (SolidAnim) with 20 Vicon cameras.

2. Motion analysis

Motions and gestures are analyzed by the LAM and the SFL laboratories (left figure). The main purpose is to explore gestures by building a physiological model grounded in linguistics. Another aspect is the automatic extraction of the meaningful part of the gesture, called the stroke. The results of these studies are reused in the “interaction” step of the project.
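The source does not describe how the stroke is extracted. As a rough illustration only, the sketch below uses one simple heuristic: the stroke is taken to be the longest run of frames in which a tracked marker moves faster than a threshold. The function names `speeds` and `find_stroke`, the threshold and the sample data are all assumptions made for this example, not the method used by the laboratories.

```python
import math

def speeds(positions, dt):
    """Speed between consecutive 3D samples of one marker (units per second)."""
    return [math.dist(p, q) / dt for p, q in zip(positions, positions[1:])]

def find_stroke(positions, dt, threshold):
    """Return (start_frame, end_frame) of the longest fast run, or None.

    Hypothetical heuristic: a frame interval is 'fast' when its speed
    exceeds `threshold`; the stroke is the longest unbroken fast run.
    """
    best = None
    run_start = None
    # A trailing 0.0 acts as a sentinel so a run that lasts until the
    # final sample is still closed and compared.
    for i, s in enumerate(speeds(positions, dt) + [0.0]):
        if s > threshold and run_start is None:
            run_start = i
        elif s <= threshold and run_start is not None:
            if best is None or i - run_start > best[1] - best[0]:
                best = (run_start, i)
            run_start = None
    return best

# Illustrative data: a hand marker rests, moves along x, then rests again.
hand = [(0, 0, 0)] * 3 + [(1, 0, 0), (2, 0, 0), (3, 0, 0)] + [(3, 0, 0)] * 2
stroke = find_stroke(hand, dt=1.0, threshold=0.5)
```

A real analysis would likely combine several joints and linguistic annotations; a speed threshold alone only separates movement from rest.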

3. Generating gestures with genetic algorithm

The platform makes it possible to generate new gestures inspired by the captured gestures of the database, which serve as the fitness criterion. The genetic algorithm developed in the lab can be applied to any joint of the virtual body, so it can generate new motions on individual body parts. The implementation is done in the Unity 3D real-time engine.
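To make the idea concrete, here is a minimal sketch of a genetic algorithm in which a captured gesture acts as the fitness reference. It is not the lab's Unity implementation: it is written in Python for brevity, it assumes a gesture is a short list of angles (in degrees) for a single joint, and the names `fitness` and `evolve`, the population size and the mutation settings are all illustrative assumptions.

```python
import random

def fitness(candidate, reference):
    """Higher is better: negative mean squared distance to the reference angles."""
    return -sum((c - r) ** 2 for c, r in zip(candidate, reference)) / len(reference)

def evolve(reference, population_size=30, generations=200,
           mutation_scale=5.0, mutation_rate=0.1, seed=0):
    """Evolve one joint-angle sequence toward a captured reference gesture.

    Assumes len(reference) >= 2 so that a crossover point exists.
    """
    rng = random.Random(seed)
    n = len(reference)
    # Start from random angle sequences.
    population = [[rng.uniform(-180.0, 180.0) for _ in range(n)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Selection: keep the better half unchanged (elitism).
        population.sort(key=lambda ind: fitness(ind, reference), reverse=True)
        parents = population[: population_size // 2]
        children = []
        while len(parents) + len(children) < population_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n)          # one-point crossover
            child = a[:cut] + b[cut:]
            # Mutation: perturb each angle with a small probability.
            child = [g + rng.gauss(0.0, mutation_scale)
                     if rng.random() < mutation_rate else g
                     for g in child]
            children.append(child)
        population = parents + children
    return max(population, key=lambda ind: fitness(ind, reference))

# Illustrative reference: one joint raising and lowering over eight frames.
captured = [0.0, 10.0, 25.0, 40.0, 30.0, 15.0, 5.0, 0.0]
new_gesture = evolve(captured)
```

Stopping after fewer generations, or scoring against several captured gestures at once, would yield motions that resemble the database without copying it, which is closer to the "inspired by" behavior described above.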

4. Graphical rendering and perception

Several renderings can be applied to the virtual character, such as different meshes for the virtual body, particle effects (like smoke or light effects), or trails that visualize the motion.

To increase immersion and to help the user perceive the character as an avatar, the interaction can take place in a virtual environment displayed in stereoscopic 3D on a screen or in a head-mounted display.

5. Interaction and autonomy

The interaction between the actors or the audience and the virtual character is designed to create a real dialogue. A series of experiments between real actors, filmed and analyzed, helped in designing the behavioral engine. During the interaction loop, the behavior of the actor (such as the orientation of the body and the speed and rhythm of the movement) is continuously perceived using Kinect and analyzed in real time, so that the virtual character can answer or improvise appropriately. We have isolated specific behaviors such as miming, proposing a new gesture from the genetic algorithm, or waiting for the user to answer.
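One step of such an interaction loop could be sketched as follows. The three behaviors come from the description above, but the decision rules, the `Perception` fields, the thresholds and the function name `choose_behavior` are hypothetical and do not reproduce the project's behavioral engine.

```python
from dataclasses import dataclass

@dataclass
class Perception:
    """What the sensor is assumed to report for one loop iteration."""
    facing_character: bool  # derived from the orientation of the body
    speed: float            # average movement speed, arbitrary units

def choose_behavior(p, moving_threshold=0.2):
    """Pick one of the three isolated behaviors (hypothetical rules)."""
    if not p.facing_character:
        # The user is turned away: wait for them to come back and answer.
        return "wait"
    if p.speed >= moving_threshold:
        # The user is actively gesturing: imitate them.
        return "mime"
    # The user is facing the character but still: take the initiative
    # with a gesture produced by the genetic algorithm.
    return "propose"

# Illustrative sequence of perceptions over three loop iterations.
frames = [
    Perception(facing_character=True, speed=0.8),
    Perception(facing_character=True, speed=0.05),
    Perception(facing_character=False, speed=0.5),
]
decisions = [choose_behavior(p) for p in frames]
```

In a real-time setting this function would be called on every sensor update, and rhythm could be added as a further field of `Perception`.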

More info

http://cigale.labex-arts-h2h.fr/

http://llinz2015.univ-paris8.fr/